Data Sparks Discovery: The 2016 NFAIS Annual Conference

By Donald T. Hawkins, Freelance Conference Blogger and Editor <dthawkins@verizon.net>

Note: A summary of this article appeared in the April 2016 issue of Against The Grain v28 #2 on Page xx.

About 150 information professionals assembled in Philadelphia on February 21-23 for the 58th NFAIS Annual Conference, which had the theme “Data Sparks Discovery of Tomorrow’s Global Knowledge”. The meeting featured the usual mix of plenary addresses and panel discussions, the always popular Miles Conrad Memorial Lecture (see sidebar), and a well-received “Shark Tank Shootout”, in which representatives of four startup companies were asked a series of probing questions by a panel of judges.

Opening Keynote: Preparing the Next Generation for the Cognitive Era

Steven Miller

Steven Miller, Data Maestro in the IBM Analytics Group, reviewed the opportunities presented by data. Data is transforming industries and professions, and the demand for data engineers is skyrocketing. New data-based professions such as “data scientist”, “data engineer”, “data policy professional”, and even “chief data officer” are emerging. The Internet of Things and software analytics have been significant drivers in the emergence of data-based professions and services, for example:

The Uber ride-sharing service uses GPS data to determine where a car is and how long it will take to arrive at the customer’s location.

Trimet, the rapid transit system in Portland, OR, has teamed with Google to integrate real-time transit data with Google Maps, allowing smartphone users to easily plan their journeys. (See bit.ly/trimetandgoogle)

HackOregon is transforming public data into knowledge; for example, large sets of geology data are used in a system to help Oregon residents plan for possible future earthquakes (bit.ly/after-shock).

London has become the leading city working with open data. The London Datastore (http://data.london.gov.uk/) provides access to a wide range of over 500 data sets about London. Users are encouraged to contribute their ideas for solutions to London’s problems.

Kabbage (https://www.kabbage.com) has helped over 100,000 small business owners qualify for loans or lines of credit and has redefined the process of obtaining business loans.

NewRelic (http://newrelic.com/) uses predictive analytics to monitor and analyze software applications and warn developers about overloads and potential outages.

Many cities are now embedding smart sensors in roadways, buildings, waterways, etc. to send and receive data, thus improving their services.

IBM has extended its Watson system, originally developed to play Jeopardy, to help doctors diagnose patients’ illnesses. Watson for Oncology (http://www.ibm.com/smarterplanet/us/en/ibmwatson/health/) combines patient data with large volumes of medical literature to deliver evidence-based suggestions to oncologists.

Obviously, in all these systems, confidence and trust in the data are critical. Many companies have failed in their responsibility to protect data, resulting in significant breaches. Data is hard to protect because it is so complex.

Data is a core business asset, but few colleges have courses in data policy skills. In today’s cognitive era, everyone must become data literate and be able to manage and analyze data. Miller said that “T-shaped” skills (boundary-crossing competencies in many areas coupled with in-depth knowledge of at least one discipline or system) are required. Data science is multidisciplinary; there is no standard definition yet for the term “data scientist.”

A look at job listings at www.linkedin.com/jobs/data-engineer-jobs (or data-scientist-jobs) and indeed.com provides a picture of how strong the demand for such professionals is. According to Miller, there are two types of data scientist: human data scientists who advise businesses, and machine data scientists who write advanced algorithms. Chief Data Officers are responsible for understanding how to use data in strategic opportunities. There is currently a feeling of great enthusiasm and eagerness in these fields.

Data Usage Practices

Sayeed Choudhury, Associate Dean for Research Data Management at Johns Hopkins University, said that much curation is required to interpret data correctly, but even crude approximations can provide good interpretations if many people are contributing the data. For example, cell phone call detail records can be used to assess regional poverty levels, migration after a disaster, etc. “Information graphs” show connections among publications, data, agents, and their properties and can be used to track connections through the scholarly communications cycle.

Courtney Soderberg from the Center for Open Science said that a reproducibility crisis is occurring in science, and the literature is not as reproducible as we would like to believe. Journals and funders have therefore implemented data sharing policies and are mandating that authors publish their raw data. Science has long focused on published results of research, but it has become important to also publish the experimental data. The Center for Open Science is working to increase openness, reproducibility, and transparency in science. It has built an open source space (see http://osf.io) where scientists can manage their projects, store files, and do research. Although private workspaces are necessary during the experimental phases of research, sharing capabilities are built into the system from the start. It is important to make it easy to share data, and not require researchers to invest time learning how to do it. They must have incentives to embrace change and be confident that they will get credit for their work.

Lisa Federer, an “Informationist” at the National Institutes of Health (NIH) Library, said that many definitions of “Big Data” revolve around the four “Vs”:

Velocity (the speed of gathering data),

Variety (many types of data),

Volume (a lot of it), and

Veracity (good data).

We do not have the ability to curate all the data we have, nor do we have social practices in place to do so. Faster and cheaper technology and an increase in “born digital” data are also providing new roles for librarians to assist researchers who have never before been required to share their data.[1] Federer estimated that the volume of data will grow 50-fold between 2010 and 2020; leveraging unstructured data will be a significant challenge. NIH has created a publicly available planning tool to help researchers create data management plans that meet funders’ requirements (see http://dmptool.org), and the NIH Library has developed a comprehensive guide to data services resources (http://nihlibrary.campusguides.com/dataservices).

Managing Data and Establishing Appropriate Policies

Heather Joseph, Executive Director of the Scholarly Publishing and Academic Resources Coalition (SPARC), said that data policy development is an evolutionary and iterative process involving the entire research community. It is focused on four major areas:

Policy drivers. US funders invest up to $60 billion a year in research to achieve specific outcomes, which require free access to the research results and the data. Some of the resulting benefits include stimulating new ideas, accelerating scientific discovery, and improving the welfare of the public.

Policy precedents and developments. What are the new “rules of the road” for accessing and using data? We are now thinking about data and specific ways to encourage open access. The Open Data Executive Order, issued in 2013 by President Obama, mandated open and machine-readable data as the default for all government information, and a subsequent Public Access Directive begins to lay out the rules for accessing data.

Emergence of research data policies. Today, three years after the Executive Order, draft or final policy plans have become available for 14 federal agencies. Although no standard set of policies has emerged, there are many commonalities, including protecting personal privacy and confidentiality, recognizing proprietary interests, and creating a balance between the value of preservation and costs and administrative burdens.

Policies supporting a robust research environment. Reiteration of evolutionary policy development, consistent policy tracking, and regular input are vital to promoting a reasonable level of standardization. Community collaboration will also be helpful in developing additional investments in the infrastructure that supports access to research data.

Anita De Waard, VP, Research Data Collaborations at Elsevier, discussed the research data life cycle that was developed at JISC.[2] Important steps in the life cycle and Elsevier’s involvement include:

Collection and capture of data and sharing of protocols at the moment of capture. De Waard mentioned Hivebench (https://www.hivebench.com/), a unified electronic notebook allowing a researcher to collaborate with colleagues, share data, and easily export it to a publication system.

Data rescue. Much data is unavailable because it is hidden (such as in desk drawers). Elsevier has sponsored the International Data Rescue Award to draw attention to this problem and stimulate recovery of such data.

Publishing software. Elsevier’s open access SoftwareX journal (http://www.journals.elsevier.com/softwarex/) supports the publication of software developed in research projects.

Management and storage of data. Mendeley and GitHub provide versioning and provenance.

Linking between articles and data sets will allow a researcher to identify data sets in repositories. Data sets must therefore be given their own DOIs, which will allow them to be linked to articles.

Many of the steps in the data life cycle are problematic because researchers believe that they will require significant additional effort; data posting is not mandatory yet; and there is frequently no link between the data and published results. Data must be stored, preserved, accessible, discoverable, and citable before it becomes trusted. These and related concepts were discussed at the FORCE2016 Research Communication and eScholarship Conference, April 17-19, in Portland, OR.

Larry Alexander, Executive Director of the Center for Visual and Decision Informatics (VDI) at Drexel University, said that the Center has a visualization and big data analytics focus and supports research in visualization techniques, visual interfaces, and high performance data management strategies. It receives funding from several corporations and currently supports 54 PhD students. In the last 3 years, 65 patentable or copyrightable discoveries have resulted from its efforts, and it has received over $1.1 million in additional funding from NSF. Some of its noteworthy results include:

Exploration of the volume and velocity of data streams in a 3D environment,

Analysis of crime data in Chicago to find hot spots and how they change,

Prediction of flu hot spots using environmental conditions (temperature, sun exposure, etc.),

A gap analysis of US patents to predict where new breakthroughs would occur, and

Mining of PLoS ONE articles to determine popularity of software use.

The use of data is reinventing how science is performed. There are many reasons for data to be open, but it must be protected. It can become obsolete and unusable because of hardware, software, and formatting changes as well as carelessness. Hacking into systems is also a problem; technology to prevent it is needed. We must also be aware of data’s pedigree: where did it come from; has it been under control; and has anyone had the opportunity to change it? There are also many issues in the area of data quality. In the future, we will each have our own personal data picture, the “data self”, which will need to be carefully protected.

New Data Opportunities

Ann Michael, President of the consulting firm Delta Think, kicked off the second day by saying that anything to do with data is a new career opportunity. Data is popular now because of an increasing availability of digital information, robust networks that can disseminate that information, and the ubiquity of computational power to process the data. (She noted that if you have a smartphone, you have more computational power in it than NASA had when they sent a man to the moon.)

Publishing and media businesses are leveraging data today. Article impacts have become more important; companies such as Plum Analytics and Altmetric are in the business of providing usage data for journal articles, and Springer’s Bookmetrix does the same for books. Other systems provide different applications; for example:

Impact Vizor from HighWire Press (http://blog.highwire.org/tag/impact-vizor/) looks at analytics of rejected articles and helps publishers decide if it would be worthwhile to start a new journal to accommodate them;

UberResearch (http://www.uberresearch.com) builds decision support systems for science funding organizations;

The New York Times uses predictive algorithms to increase sales and engagement (it is careful to emphasize that such data are not used to make editorial decisions);

RedLink (https://redlink.com) helps marketing and sales teams at academic publishers focus on the needs of their customers; and

Tamr (http://www.tamr.org) connects, cleans, and catalogs disparate data so that it can be used effectively throughout organizations to enhance productivity. (Thomson Reuters used it to disambiguate company names, and Wiley is using it for author and customer disambiguation.)

It sounds easy to process data, but many problems must be surmounted. Privacy policies (which vary among countries), security to protect the data, standards, effects on staff (i.e., new roles and changes to existing roles), and culture must all be considered.

Building Value Through a Portfolio of Software and Systems

Three entrepreneurs followed Michael and described their products for using data. Overleaf (http://www.overleaf.com) provides a set of writing, reviewing, and publishing tools for collaborators and removes many of the frustrations that authors experience, especially when an article has many authors. Many articles today have more than one international author, and the traditional way of collaborating was to email versions of documents to them, which led to long email chains, multiple versions of the same document, reference maintenance problems, and lengthy revision times. Now, documents can be stored in the cloud and managed by Overleaf, so most of these problems are removed.

Overleaf contains a full suite of LaTeX code and allows authors to write interactively and see a typeset version of the article in a separate pane, which dramatically shortens preparation and reviewing time. Instructions for authors can be embedded in templates; bibliographies in a variety of styles can be created; and papers can easily be deposited in repositories. In the last 3 years, 4 million documents authored by 350,000 authors at 10,000 institutions have been created using Overleaf. The system can generate metrics, help users find collaborators, and automate pre-submission tests of manuscripts. Some journals are now receiving up to 15% of their submissions from Overleaf.

Etsimo (http://www.etsimo.com) is a cloud-based visual content discovery platform combining an intelligent search engine and an interactive visual interface to a document collection. In traditional (“lookup”) searching, the user’s intent is captured only in the initial query, so the query must be reformulated if revisions are needed. Lookup searches usually result in long lists of hits, and users seldom go beyond the first few pages. Although lookup searches are sometimes successful if the user knows what they are looking for or how to formulate a query, they do not work well for finding new knowledge or discovering unknowns. Query formulation is challenging, and large numbers of hits obscure the big picture.

Etsimo works with keywords in a full-text index and uses artificial intelligence (AI) or machine learning to create connections in the content. The searcher is in complete control, and retrieval is fast and easy. In usability tests, results were improved significantly; searchers’ interactions increased; and twice as many pages were viewed. The system has been implemented successfully in a wide variety of subject areas. A demonstration is available at http://wikipedia.etsimo.com.

Meta (http://meta.com/) is a scientific knowledge network powered by machine intelligence that seeks to solve some of the common problems caused by the current flood of scholarly articles. These articles are usually dense, technical, and heavily dependent on context, so it can take a subject matter expert a few hours to read and digest an article. This process is being done every day around the world, and important decisions are often based on it. With a huge growth in the numbers of papers published (in the biomedical area alone, 4,000 new papers are published every day), valuable insights become locked in papers. The scientific information ecosystem has broken down. Researchers produce over 3 million manuscripts every year, resulting in long publication times. The only tool we currently have for coping with this flood is search, which is good when you know what you are looking for. We have high quality comprehensive databases, but we need something to explore science at the rate at which it is produced.

Meta is powered by the world’s largest knowledge graph that has over 3.5 billion connections and is coupled with ontologies to unlock the information in scientific articles. It provides access to 38,000 serial titles and 19 million articles from 29 publishers and is one of the world’s largest STM text mining collections. Researchers can receive recommendations and discover unknown articles or historical landmark articles based on the concepts and people they follow. Bibliometric intelligence can be integrated into author workflows, which provides significant benefits to all parties in the publication process:

Authors can select the best potential journal in which to publish their article, based on subject fit and predicted manuscript impact. They receive faster feedback and can rapidly redirect rejected manuscripts to another journal most likely to review them.

Publishers receive submissions better matched to their journal’s subject coverage and are less likely to reject a high-impact article. They are able to publish more papers and still maintain quality standards.

Editors receive more relevant manuscripts to review, which shortens review times.

Creating Value for External Institutions and Systems

Carl Grant from the University of Oklahoma said that everyone needs access to existing knowledge to incubate new ideas. We must facilitate “wow” moments, but there are barriers in the way: we have an overload of data and information in silos which have proprietary interfaces on top of them and legal language that impedes negotiations for access. Information authentication systems do not communicate with each other, forcing users to log off and on when they access different systems. Librarians need to develop data governance policies for their institutions and install the resulting infrastructure across all internal silos, create open access repositories, and develop networks.

James King, from the NIH library, described its vision to be the premier provider of information solutions by enabling discovery through its “Informationist” program (see http://nihlibrary.nih.gov/Services/Pages/Informationists.aspx), in which information professionals are embedded into NIH workflows to focus on delivering knowledge-based solutions and providing support for:

Bibliometric analysis: assessment of each institute to discover the impactful authors, diseases impacted, productivity measurements, and citation impacts, and

Collection assessment and analysis: e-resources management, document delivery requests, document-directed acquisition instead of library selection, and maintenance of a database of publication and usage information.

A unique feature of the Informationist program is its custom information services: a “Geek Squad” that provides support to informationists by offering digitization of government publications, database access via APIs, and consulting services. Examples of custom information services include creation of an International Alzheimer’s Disease Research Portfolio, a 6,000-row spreadsheet that was converted into a Drupal-based database with an accompanying taxonomy; and an Interagency Pain Research Portfolio containing 5,000 historical publications in 17 different languages that was converted to a virtual research environment.

Miranda Hunt, User Experience Researcher at EBSCO, said that it is important to understand the context of research when choosing the right methods for a research project. EBSCO has used both quantitative and qualitative data to conduct usability research on its products and partners. Techniques included interviews with key users, card sorting to help develop the navigational structure of a website, and surveys. It is important to think about access to people and their availability when designing these research studies, as well as stakeholder expectations. Practice runs are also important to ensure that the studies will be conducted successfully.

Simon Inger

How Readers Discover Content in Scholarly Publications

At the members-only lunch, Simon Inger presented the results of a large survey that stemmed from the recognition that search and discovery do not happen in the same silo. Navigation through journal articles is very different from books, which are driven by business models and protection technologies. Libraries generally buy e-books by the silo because they have their own URLs and their own websites.

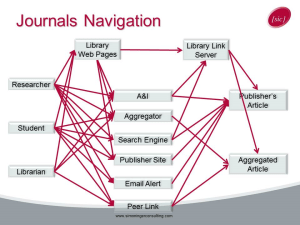

For journals, there are many ways of discovering articles. For example, this figure shows how some academic readers might navigate to various incarnations of the publisher’s article.

However, publishers cannot get a complete picture of access to their content because they can see only the last referring website; in contrast, libraries can view the complete access paths of the users, as these diagrams show.

We generally do not know where people start their research, so Inger’s consulting company conducted a large survey of reader navigation in 2015 to gain a view of the importance of access channels to information publishers and buyers. The study took 18 months to plan, conduct, and analyze. Support for the survey was obtained from about a dozen publishers and content providers; about 2 million invitations were sent to potential respondents, and 40,000 responses were received from all over the world (there was even one from the French Southern and Antarctic Lands!).

Survey results from the 2015 respondents were combined with nearly 20,000 responses from an earlier survey in 2012. Literally millions of hypotheses can be tested using an analytical tool. Sponsors will receive access to the full set of data and the analytical tool; a summary of the main conclusions is freely available at http://sic.pub/discover. Analysis and updating of the findings is continuing and will become available throughout 2016. Here are some of the findings:

It is important to note that publishers always report that they receive more traffic from Google than from Google Scholar, but traffic from Google Scholar typically comes from link resolvers which are not the original source of the research. Inger concluded his presentation by noting that understanding the origins of reader navigation helps publishers, libraries, indexing organizations, and technology companies to optimize their products for different sectors across the world.

Globalization and Internationalization of Content

This session followed up on one at last year’s NFAIS meeting, at which attendees indicated that one of the largest influences on scholarly information was the growing global demand for information, particularly from China and the Asia-Pacific area.

James Testa, VP Emeritus, Thomson Reuters, noted that the 10 countries with significant growth in journal coverage in the Web of Science (WoS) database each added at least 40 journals to their coverage in the last 10 years. Testa presented some interesting statistics on these 10 countries: China, Spain, and Brazil have all increased their coverage; coverage of Turkish journals has grown from 4 to 67 journals, and China has quadrupled its annual output to about 275,000 articles in 2015. Here are his interpretations of this data:

Many obscure journals were revealed with the introduction of the internet, and the WoS user base became more internationally diverse, so Thomson Reuters began to add more journals to its coverage.

Australia is ranked first among those countries by citation impact, probably because its journals all publish in English.

By citation impact, Chinese journals rank in 7th place, which is an indication of their lower quality. Chinese authors receive rewards for publishing in journals covered by the WoS, but their articles tend to be shallow in inventiveness and originality.

Serious side effects of globalization include the practice of rewarding scholars disproportionately for publishing in high-impact journals, a lower regard for peer review, and excessive self-citations.

Major progress in communication of scholarly results has been achieved, but efforts to gain higher rankings by unethical behavior are suspect.

Secondary publishers must demonstrate that their procedures to remove questionable entries from their publications are effective.

Stacy Olkowski, Senior Product Manager at Thomson Reuters, said that patents contain extremely valuable technical information; the claims are like recipes in a cookbook. Some 70% of the information in patents cannot be found anywhere else in the research literature. Patents are more than just technical documents and can provide answers to marketing and business information questions such as “What is the competition doing?”, “Why are they devoting resources to a technology?”, and “What is happening in other countries?”

Focusing on China, Olkowski said that there has been an incredible growth in the numbers of papers being published in Chinese journals, and the same trend has occurred with Chinese patents. The Chinese government initially questioned whether they should establish a patent office, but they did so in 1984, and now it is first in the world in numbers of patent applications with an annual growth rate of about 12.5%. About 85% of the applications to the Chinese patent office are from Chinese nationals. Since 2003, the Chinese government has been paying people to apply for patents; about 40% of the applications are granted and become patents.

Donald Samulack, President of US Operations for Editage (http://www.editage.com), presented a concerning picture of the globalization of the Chinese published literature. He said that Western publication practices have typically been built on trust and rigorous peer review, but there has been a tsunami of articles from China, and there is an entrepreneurial element of commerce in every part of Asian society, which has led to an erosion of this trust and honesty. Samulack is worried that getting a good paper published in a reputable journal will become increasingly difficult. There are irresponsible and in some cases predatory commercial elements in the Chinese publication process, such as authorship for sale (see http://scipaper.net), plagiarism, writing and data fraud, paper mills, hijacked and look-alike journals, and organizations that sell fake impact factors and misleading article metrics. Without appropriate guidance regarding publication ethics and good publication practices, Chinese researchers fall prey to these scams. In 2 years, he thinks that authors will find it very difficult to identify unethical practices because they will have become mainstream.

In response to unethical publication practices that led to the retraction of many articles by Chinese authors, the Chinese government has recently issued a policy of standard conduct in international publication which forbids Chinese scientists from using third parties to write, submit, or edit manuscripts, providing fake reviewer information, or acting against recognized authorship standards. According to Samulack, some scientists have been removed from their academic positions and forced to repay grant money to the government. He and others have proposed the formation of a Coalition for Responsible Publication Resources (CRPR, http://www.rprcoalition.org/) to recognize publishers and vendors that are “vetted as conducting themselves and providing services in alignment with current publishing guidelines and ethical practices, as certified through an audit process…” so that authors can readily identify responsible publication resources. Articles describing efforts to combat unethical publication activities have been published in Science, Nature, and on the Editage website (http://www.editage.com/insights/china-takes-stern-steps-against-those-involved-in-author-misconduct).

Shark Tank Shoot Out

The final day of the NFAIS meeting began with a very informative session in which four entrepreneurs briefly described their companies and products and then were subjected to questioning by three judges: James Phimister, VP, ProQuest Information Solutions; Kent Anderson, Founder, Caldera Publishing Solutions; and Christopher Wink, Co-Founder, Technical.ly. The questions were very intense and probing and mainly centered around the companies’ business models (they reminded me of the process one goes through when taking oral exams for a PhD degree!). Here are summaries of the four companies.

Expernova (http://en.expernova.com/) finds an expert to solve a problem by accessing a database of global expertise that contains profiles of 10 million experts and 55 million collaborations. The company has customers in 12 countries, has earned $775,000 in revenue and is profitable. It experienced 56% growth in 2015.

Penelope (http://www.peneloperesearch.com/) reviews article manuscripts, detects errors such as missing figures or incorrect references, and checks for logical soundness, statistics, etc. to eliminate errors and shorten review times. The company is in a very early startup phase and is supported by UK government grants.

Authorea (https://www.authorea.com/) is a collaborative writing platform for research. Current methods of writing and dissemination are obsolete, slow, and inefficient; today’s 21st century research is written with 20th century tools and published in a 17th century format. Today’s writing tools are intended to be used by one person working offline. Authorea contains an editor for mathematical equations, makes it easy to add citations, comments, etc., and provides 1-click formatting to create and export a PDF of the completed article. The company has 76,000 registered users who have written about 3,000 articles. A selection of published articles written on Authorea is available at https://www.authorea.com/browse.

ResearchConnection (https://researchconnection.com/) is a centralized database of university research information that allows students to search for prospective mentors and is searchable by location, university, and subject. The database is created from public information; universities can upload their research programs. So far, 41 universities are participating, and an advisory board has been formed to help with data, university contacts, finances, and educational technologies. ResearchConnection’s target market is the top 200 US universities and 3 million students seeking advisors and applying to graduate schools.

At the end of the session, the judges declared Authorea the winner of the shoot out because of its network potential, freemium business model, and likelihood of attracting investors.

Leveraging Data to Build Tomorrow’s Information Business

Marjorie Hlava, President, Access Innovations, said there are 3 levels of AI:

Artificial narrow intelligence is limited to a single task, such as playing chess.

Artificial general intelligence can perform any intellectual task that a human can.

Artificial superintelligence, in which the machine is smarter than a human, is the realm of science fiction and implies that the computer has some social skills.

She also noted that computing has a new face now and is not accelerating at its former pace; Moore’s Law was broken in 2015.

What about AI in publishing? The major AI technologies include computational linguistics, automated language processing (natural language processing, co-occurrence, inference engines, text analytics, and automatic indexing), and automatic translation. Semantics underlies all these systems, which work more accurately with a dictionary or taxonomy. All these technologies are based on available knowledge; we need to move forward with our automation systems.

Access Innovations is pushing the edges of AI and is developing practical applications for publishers. Concepts are the key and can be mined from the metadata. Along with topics and taxonomies, they make publishers’ data come alive in the AI sense. Support for Level 1 AI includes concepts, automatic indexing, and discovery. Semantic normalization tells us what the content is about, so we can now issue verbal commands, retrieve relevant results, filter for relevance to the requester, and sometimes give answers. We have begun to approach Level 3, especially in medical diagnosis systems.

Expert System (http://www.expertsystem.com/) develops software that understands the meaning of written language. Its CEO, Daniel Mayer, said that publishers have enormous archives of unstructured content and are looking for ways to exploit it and turn it into products. They want to help users find information faster and more easily, focus on the most relevant content, find insights, and make better decisions. Faceted search, a recurring feature of online information products, is supported by taxonomies and offers users an efficient way to access information. It also helps in disambiguation. Content recommendation engines use AI technologies to let users discover things unknown to them. The end goal is to provide a faster way of getting to an answer, not just to the content.
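The faceted search idea described above can be illustrated with a minimal sketch. It assumes each document already carries facet values assigned from a taxonomy; the records, field names, and the helpers `facet_counts` and `filter_by` are hypothetical and are not taken from any Expert System product.

```python
from collections import Counter

# Hypothetical records: each document carries taxonomy-derived facet values.
DOCS = [
    {"title": "Gene therapy trials", "subject": "Medicine", "year": 2015},
    {"title": "Seismic risk maps", "subject": "Geology", "year": 2016},
    {"title": "Cancer genomics", "subject": "Medicine", "year": 2016},
]


def facet_counts(docs, facet):
    """Count how many documents fall under each value of one facet."""
    return Counter(doc[facet] for doc in docs)


def filter_by(docs, **selected):
    """Keep only the documents that match every selected facet value."""
    return [d for d in docs if all(d.get(k) == v for k, v in selected.items())]


if __name__ == "__main__":
    # Show the available subject facets, then narrow the result set.
    print(facet_counts(DOCS, "subject"))
    print(filter_by(DOCS, subject="Medicine", year=2016))
```

The counts tell the user which refinements are available before they click, and each selected facet narrows the result set without a new free-text query.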

AI is only a tool and will not replace humans in the future, but we can use it to our benefit. Typically, one connects the dots between different entities by creating an ontology and defining the relationships between the entities (known as “triples”). To leverage the value of a taxonomy, an integrated semantic workflow is key because it can automate indexing, make work easier, and reduce the cost of ownership.
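As a rough illustration of the “triples” mentioned above, the sketch below stores relationships as subject-predicate-object statements and looks them up by pattern. The entity names, the `Triple` type, and the `match` helper are invented for this example; production systems would more likely use an RDF store and a query language.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One statement: a subject, a relationship, and an object."""
    subject: str
    predicate: str
    obj: str


# Hypothetical statements connecting a few entities.
TRIPLES = [
    Triple("aspirin", "treats", "headache"),
    Triple("aspirin", "is_a", "drug"),
    Triple("ibuprofen", "treats", "headache"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return the triples that agree with every field that is not None."""
    return [
        t for t in triples
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    ]


if __name__ == "__main__":
    # Which entities are recorded as treating a headache?
    for t in match(TRIPLES, predicate="treats", obj="headache"):
        print(t.subject)
```

Leaving a field as `None` acts as a wildcard, so the same function answers “what does aspirin do?” and “what treats a headache?”.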

Lee Giles, Professor at Pennsylvania State University, defined scholarly big data as all academic or research documents, such as journal and conference papers, books, theses, reports, and their related data. Examples of scholarly big data are Google Scholar, Microsoft Academic Search, and publisher repositories. Sources of scholarly big data include websites, repositories, bibliographic resources and databases, publishers, data aggregators, and patents.

Research on scholarly big data follows many directions: data creation, search, data mining and information extraction, knowledge discovery, visualization, and uses of AI. Machine learning methods have become dominant. For example, the CiteSeerX system (http://csxstatic.ist.psu.edu/about) has a digital library and search engine for computer and information science literature and provides resources to create digital libraries in other subjects. It can extract data from tables, figures, and formulas in articles and has a wide range of leading-edge features. Some of the technologies used in the system include automatic document classification, document deduplication, metadata extraction, and author disambiguation.
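To give a feel for one of the techniques listed above, here is a deliberately simple sketch of document deduplication based on normalized titles. It is not the method CiteSeerX uses, which the talk did not describe; the record layout and the functions `normalize_title` and `deduplicate` are assumptions made for illustration.

```python
import re
import unicodedata


def normalize_title(title):
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9]+", " ", text.lower())
    return text.strip()


def deduplicate(records):
    """Keep the first record seen for each normalized title."""
    seen = set()
    unique = []
    for record in records:
        key = normalize_title(record["title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


if __name__ == "__main__":
    # Hypothetical records; the first two differ only in case and punctuation.
    records = [
        {"title": "Deep Learning: A Review"},
        {"title": "deep learning - a review "},
        {"title": "Graph Mining"},
    ]
    print(deduplicate(records))
```

Exact matching on a normalized key catches only trivial variants; a real system would likely add fuzzy matching on titles, authors, and full text.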

Closing Keynote: AI and the Future of Trust

Stephane Bura

Stephane Bura, Co-Founder, Weave (http://www.weave.ai/), said that trust is a guiding principle and will have the most impact on our information systems. The main context in which we use trust is the security of our data and whether it is private or sufficiently anonymized. He illustrated these concepts in the context of video games, which are designed to cater to players’ emotions by using their motivations. Extrinsic motivations come from outside of us; we experience them when we choose to use a service. But the real motivations that drive us are intrinsic:

Mastery: the desire to be good, or competence. You trust that if you learn to use the service, you will be rewarded and will not need to learn how to use it again when you return to it. We want the system to allow us to experiment in a safe place.

Autonomy: the desire to be the agent in your life, set your goals, and reach them. We trust services to be fair and truthful in our lives and expect they will provide solutions to our problems.

Relatedness: the desire to connect and find one’s place in the community. It involves relationships with the system and with others through the system. Users and systems model each other and give feedback. We trust systems to know why we are unique and why we are using them. Trust and relatedness will be the next big thing in personal recommendations.

Photos of some of the attendees at the meeting are available on the NFAIS Facebook page. The 2017 NFAIS meeting will be in Alexandria, VA on February 26-28.

SIDEBAR

The Miles Conrad Memorial Lecture

Deanna Marcum

Longtime attendees at NFAIS annual meetings will know that the Miles Conrad Memorial Lecture, given in honor of one of the founders of NFAIS, is the highest honor bestowed by the Federation. This year’s lecturer was Deanna Marcum, Managing Director of Ithaka S+R (http://www.sr.ithaka.org/), who was previously Associate Librarian for Library Services at the Library of Congress. She presented an outstanding and challenging lecture on the need for leadership changes in academic libraries in today’s digital age. The complete transcript of Marcum’s lecture is available on the NFAIS website at https://nfais.memberclicks.net/assets/docs/MilesConradLectures/2016_marcum.pdf.

Marcum said that the library used to be an end in itself, but now it needs to facilitate access to the full web of accessible resources. We have moved beyond simply providing support for searches and are educating students on web technologies. Automation has given us the tools to put resources in the hands of students, scholars, and the general public; all libraries are digital now, so they have become leaders in the digital revolution. But there is more to do; academic libraries must make dramatic changes, and a different kind of leadership is necessary, especially at the executive level.

According to Marcum, most academic library executives have at least one foot in the print world and have been trained to focus on local collections. However, a national and global mindset is essential, which requires a different kind of leadership. Leaders must have the capacity to channel the right knowledge to the right people at the right time. They must invest in services that users really want, instead of just making catalogs of their collections. Young librarians currently entering the profession are not interested in waiting several years to make a contribution; they want to make a difference immediately. Pairing them with people who know the print collection will help create new and innovative services.

Marcum applied 10 practices of digital leaders that were found in a study of successful digital organizations[3] to the library profession:

Build a comprehensive digital strategy that can be shared repeatedly. Users need immediate access to electronic information.

Embed digital literacy across the organization. Librarians must know as much about digital resources as they do about print ones.

Renew a focus on business fundamentals. We must integrate digital and legacy resources to give currency to our mission.

Embrace new rules of customer engagement. Users are now in control and can decide what is most important and how much it is worth. Many libraries have taken on aspects of cultural institutions and have made the library a welcoming place for students’ broad needs.

Understand global differences in how people access and use the internet. We must provide services to a widely diverse population.

Develop the organization’s data skills. Leaders must rely on data-driven decisions instead of past practices.

Focus on the customer experience. Design services from the customer’s perspective; there is no “one size fits all”.

Develop leaders with skill sets that bridge digital and traditional expertise. Invest the time to learn about digital technologies and the opportunities they present. Help staff on both sides of the digital divide see the value the other brings. New staff will be impatient with old rules.

Pay attention to cultural fit when recruiting digital leaders. Minimize silos and focus on customers. Empower leaders who can advance digital objectives in an inspirational rather than a threatening way.

Understand the motivations of top talent. Make it attractive to remain with the organization by making sure that there is excitement in the library.

Libraries are at a pivotal point now, and survival depends on becoming a node in a national and international ecosystem. Information needs are vast; digital technology has opened the doors for us.

Donald T. Hawkins is an information industry freelance writer based in Pennsylvania. In addition to blogging and writing about conferences for Against the Grain, he blogs the Computers in Libraries and Internet Librarian conferences for Information Today, Inc. (ITI) and maintains the Conference Calendar on the ITI website (http://www.infotoday.com/calendar.asp). He is the Editor of Personal Archiving (Information Today, 2013) and Co-Editor of Public Knowledge: Access and Benefits (Information Today, 2016). He holds a Ph.D. degree from the University of California, Berkeley and has worked in the online information industry for over 40 years.

[1] See Federer’s article, “Data literacy training needs of biomedical researchers”, J Med. Libr. Assoc., 104(1): 52-7 (January 2016), available at http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4722643/. Also see http://data.library.virginia.edu/data-management/lifecycle/, which describes the data management lifecycle and roles librarians can play.

[2] “How and why you should manage your research data: a guide for researchers”, https://www.jisc.ac.uk/guides/how-and-why-you-should-manage-your-research-data

[3] http://www.slideshare.net/oscarmirandalahoz/pagetalent-30-solving-the-digital-leadership-challenge-a-global-perspectives